Google’s Stitch just launched, offering to use AI to design any app or website for you. Exciting! I tried it out on a real-world task and compared it to the off-the-shelf AI-driven options that already exist today – is Google’s product a huge step forwards? Is it worth using? If not, what should we use instead?

Concrete example

This current website needs a lot of (re-)design work. I launched it 2 years ago with a quick and simple design, just enough to put the site live, planning to come back and do it professionally later. But of course I never got around to it.

So let’s use AI to (hopefully) design a much better site in a few minutes, and see what happens.

Actual prompt:

I wrote a minimal-effort, generic prompt, which should be good enough for an LLM:

“modern site promoting an individual as a thought leader and public speaker, available for paid interim engagements, and showcasing their many blog posts and a small number of their conference talk videos”

Expectations:

- Using any LLM-based AI to solve this should be very “forgiving”. They only need the vision and they’ll extrapolate from there; details help them but they don’t need handholding/babysitting

- Google’s new product was launched this week with lots of fanfare. Until last week, Google’s own LLM (Gemini 2.5 Pro) was the best-performing modern AI/LLM around, so we have high expectations for a Gemini-powered UX design AI site.

- Competitors have been on the market for 18 months, and about 4 months ago they went from “OK” to “really good”. Google has a lot of catching-up to do here.

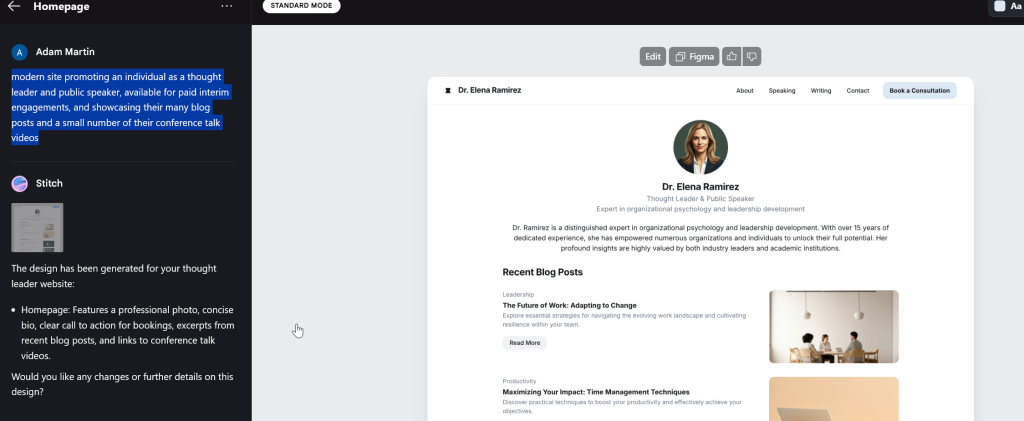

Option 1: Google Stitch

Site: https://stitch.withgoogle.com/

Configuration/setup:

I used the plain prompt and their “Standard” settings.

Output:

Evaluation

This is terrible. I haven’t seen design this bad in almost 20 years.

I tried an ongoing conversation with Stitch’s AI to improve this: I said the typography, the layout, and the color scheme were all wrong. It claimed to fix all of them … and then output almost the exact same design as the first time (not even worth attaching the screenshot, it’s almost identical).

It also took well over a minute to generate the image. A long dull wait.

Output: a plain image that you can’t interact with (you have to use the other Stitch features to export this into something useful you can interact with). The big interesting feature here is ‘export to Figma’. Most individuals won’t benefit from it, but it could be good for teams, in theory (Figma is a complicated and powerful design app for professionals, but non-designers struggle to use it effectively).

Option 2: Midjourney + Stitch

Idea: Use Midjourney (top-tier AI image generator) to create an image, then use Stitch to convert that to an actual website design.

Site: https://www.midjourney.com/

Midjourney has been live for 3 years, producing extremely high-quality visuals using diffusion-based AI (i.e. it does *not* use LLMs). Diffusion tech is fast and great for images but very difficult to control: it only reads keywords in the input and cannot understand sentences or meaning. It has none of the ‘intelligence’ of LLMs (it predates ChatGPT).

Configuration/setup:

I had to modify the prompt – my original prompt was a lazy, off-the-cuff, quick description I’d put no thought or effort into. That’s fine for LLMs, but for a diffusion-based AI I knew I’d need to simplify and clarify a bit. But first let’s see what happens with the naked prompt (identical to the one I gave Stitch). Midjourney always creates 4 images for each input, letting you explore different interpretations quickly, so here are the thumbnails for our initial prompt:

Evaluation

… oh dear. Note that it failed to understand the key word “site” and the implied “design a website” brief.

But this is what we expected. So … let’s give it a more ‘diffusion friendly’ prompt.

I’ll prepend the text: “ui ux webdesign layout” — this is a fairly common way to prod MJ into giving you a webpage or similar:

… well, sort-of. MJ loves to make beautiful images; that’s what it’s tuned for by the vendor. But we want something that Stitch won’t misinterpret – we ideally want a screenshot of the website, not a photo of a laptop. Typical approach with MJ: add “landing page” and remove as many words as possible.

Note: since MJ doesn’t understand meaning/semantics, there’s no value to including words like “of” and “and” — it effectively discards them. This is unlike ChatGPT/LLMs which use every word in the sentence to understand the overall meaning. If we remove distracting words, MJ will get closer to our intent.
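A minimal Python sketch of that trimming step, for anyone who wants to automate it. Everything here is illustrative: the function name `to_diffusion_prompt` and the filler-word list are my own assumptions, not part of Midjourney, and the output is not necessarily the exact prompt used for the images in this post.

```python
import re

# Hypothetical filler-word list (an assumption); extend it to taste.
FILLER_WORDS = {
    "a", "an", "and", "as", "for", "of", "the", "their", "to", "with",
}


def to_diffusion_prompt(text, prefix="ui ux webdesign layout", suffix="landing page"):
    """Lowercase the brief, drop filler words, and wrap it in layout keywords."""
    # Keep only word-like tokens; punctuation and quotes are discarded.
    words = re.findall(r"[a-z0-9-]+", text.lower())
    kept = [w for w in words if w not in FILLER_WORDS]
    # Skip an empty prefix/suffix so we never emit stray spaces.
    parts = [p for p in (prefix, *kept, suffix) if p]
    return " ".join(parts)


if __name__ == "__main__":
    brief = (
        "modern site promoting an individual as a thought leader and "
        "public speaker, available for paid interim engagements"
    )
    print(to_diffusion_prompt(brief))
```

The prefix and suffix defaults are the two keyword phrases mentioned above; pass empty strings if you only want the filler words removed.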

… not ideal: with a little more effort you could get MJ to output only the website design, without the laptop/office/etc. But for an ‘I’m being lazy’ test this is good enough.

Now I crop some of the images and pass them to Stitch:

Cropped image (this is cropped from the third thumbnail in the second row of images above):

…let’s take it to Stitch

Discovery: Stitch defaults to ‘I suck’ mode

The button for using an image prompt in Stitch is broken – when you click it you get a popup saying you need to log in to your Google account. And when you log in to Google and refresh … the image button vanishes. Huh?

It turns out: Stitch has a “Standard” mode that doesn’t use Google’s current AI (it uses an outdated downgraded version), and an “Experimental” mode that uses the real AI/LLM. I guess to save money … Google defaults to “Standard” mode, and only people who’ve logged in to their Google account can even see/use the real AI-driven “Experimental” mode.

As a user I don’t see why you’d want to use “Standard” mode. It should not be the default.

This means, of course, that we need to re-do our original attempt – for a fair comparison, we need to use Experimental mode with the original prompt, and then also use Experimental mode with the MJ image as inspiration.

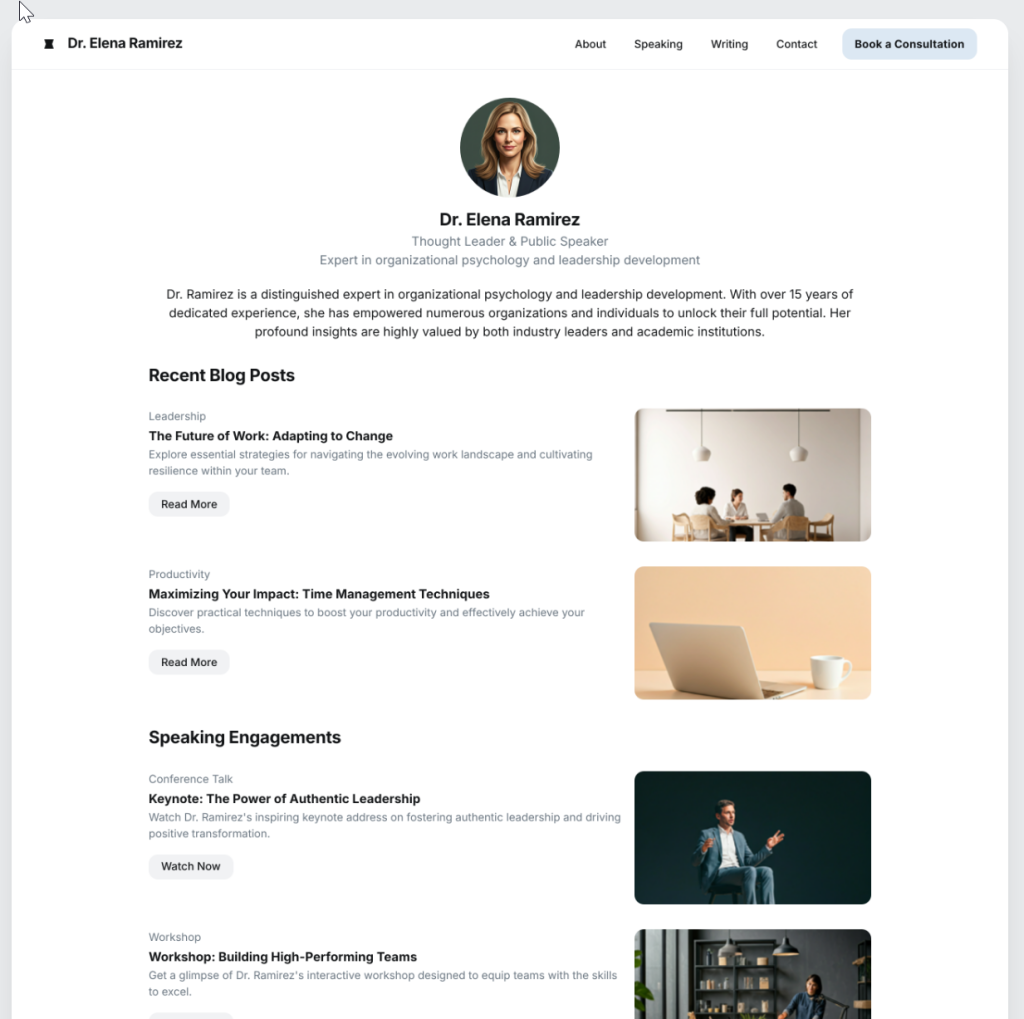

Option 3: Stitch … but using the real AI this time

Configuration/setup:

- Log in to a Google account

- Visit stitch.withgoogle.com

- Select “Experimental” from the dropdown in top right of the page

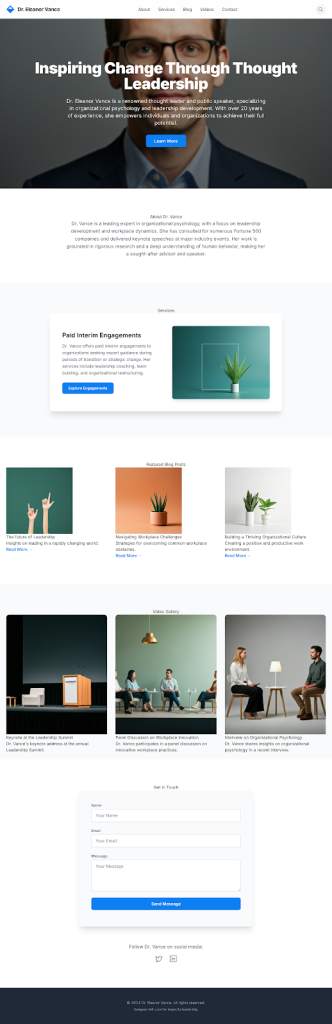

Evaluation

This is much better – an actual real website this time, instead of a weird mess.

It took 2-3 minutes (!) to generate, which felt like forever.

The design is OK but disappointingly basic. The market leaders (e.g. Replit.com – basically the same service as Stitch) were producing the same or better quality a year ago. But at least it’s usable!

Option 4: Stitch with Midjourney, take 2

Configuration/setup:

- (previous work): use Midjourney to generate an initial “screenshot”

- (previous work): log in to Stitch and get it into “Experimental” mode

- Attach the image to the prompt box

- Copy/paste the original prompt in (required: Stitch won’t let you submit without some text in there)

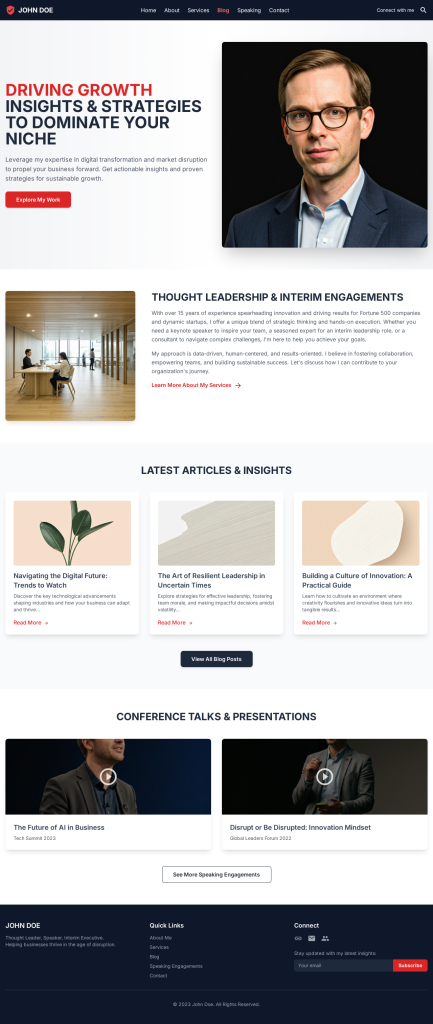

Evaluation

It’s much better: with Midjourney doing the hard work, Stitch produced something quite good.

Note that it’s lost / misunderstood most of the design language, so you’d still have to do a lot of manual work if you wanted to preserve details of the original MJ design.

Option 5: Midjourney + Cursor

Idea: If MJ is doing the hard part – creating the design – and we’re using an external site (Stitch) to convert that to editable image/code … why not use the market-leading software that already exists for that? We’ll take Cursor (an IDE for programmers that is pre-integrated with AI at its core) and give it the image and ask it to do all the work automatically, in its “Agent” mode.

Site: https://www.cursor.com/en

Configuration/setup:

“Programmers” and “IDE” may sound complex and off-putting, but in reality you don’t need to know how 99% of it works – you can just use it as an AI-driven coding system. Anyone who’s used an IDE will know how to use it for real coding (it’s based on Microsoft’s VS Code IDE), but we’re not going to bother.

- Install Cursor

- Create a devcontainer (Microsoft tech: encapsulates a project and all the dependencies in an isolated sandbox. Doesn’t install anything on your desktop/laptop, doesn’t mess up your Windows install, and is very easy to convert/upgrade into a live website hosted using standard tech on Azure/GCP/AWS)

- Give Cursor a custom prompt + image

- Put Cursor in ‘Agent’ mode, where it uses Agentic AI to go do all the work for you
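For the devcontainer step, here is a rough Python sketch that writes a minimal `.devcontainer/devcontainer.json`. This is not the exact file from my project: the container name, the Node.js image tag, and the forwarded port are all assumptions chosen for illustration, so adjust them to whatever your project needs.

```python
import json
from pathlib import Path


def devcontainer_config(name="mj-design-site", port=8080):
    """Build a minimal dev container definition for a Node-based web project."""
    return {
        # Hypothetical project name, purely for display in the editor.
        "name": name,
        # Assumption: a Node.js image from Microsoft's dev container registry.
        "image": "mcr.microsoft.com/devcontainers/javascript-node:20",
        # Assumption: the dev server inside the container listens on this port.
        "forwardPorts": [port],
    }


def write_devcontainer(project_dir, config=None):
    """Write .devcontainer/devcontainer.json under project_dir; return its path."""
    target = Path(project_dir) / ".devcontainer" / "devcontainer.json"
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(json.dumps(config or devcontainer_config(), indent=2) + "\n")
    return target


if __name__ == "__main__":
    # Print the config instead of writing it, so running this has no side effects.
    print(json.dumps(devcontainer_config(), indent=2))
```

Call `write_devcontainer("path/to/project")` to create the file, then reopen the folder in the container from the IDE.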

Modified prompt

For Cursor to work, it needs to know what to do – so we give it a tiny bit of preamble. We don’t bother with the original prompt, that’s ‘assumed’ as part of the MJ image.

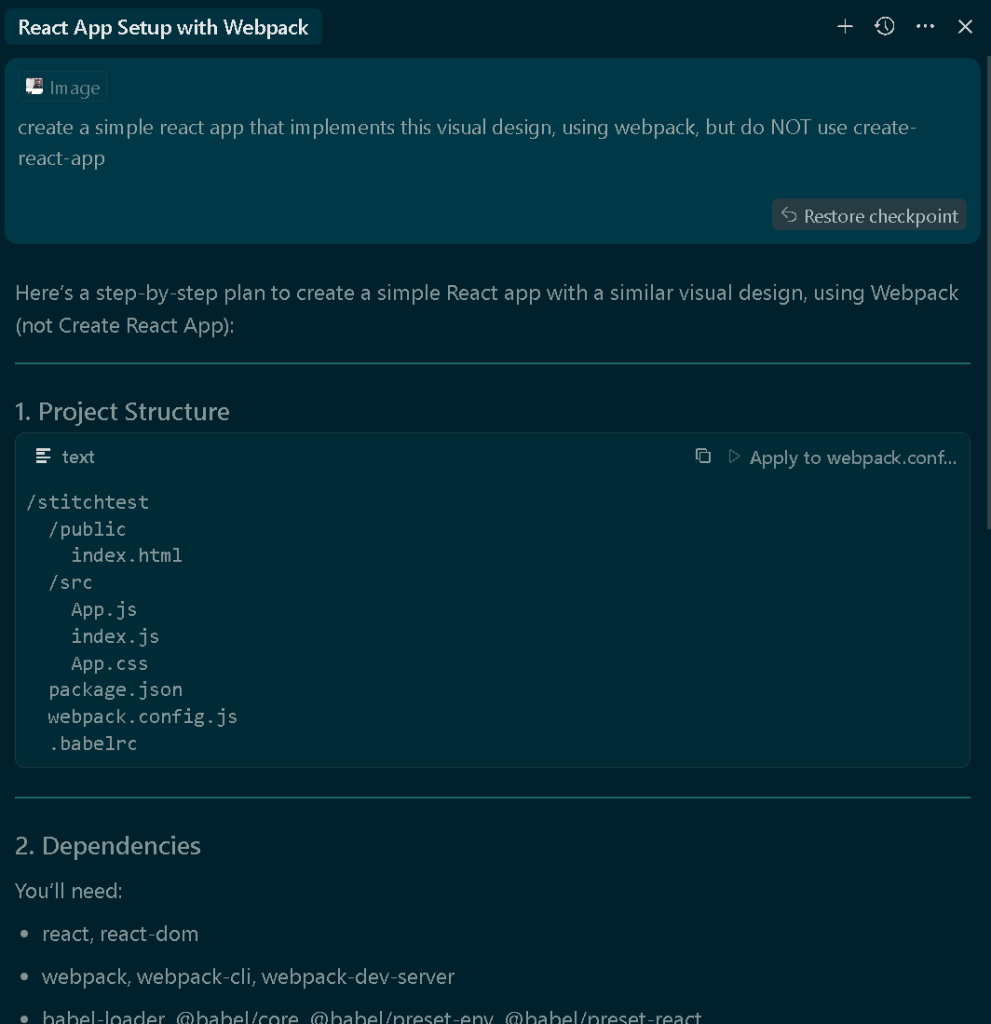

“create a simple react app that implements this visual design, using webpack, but do NOT use create-react-app”

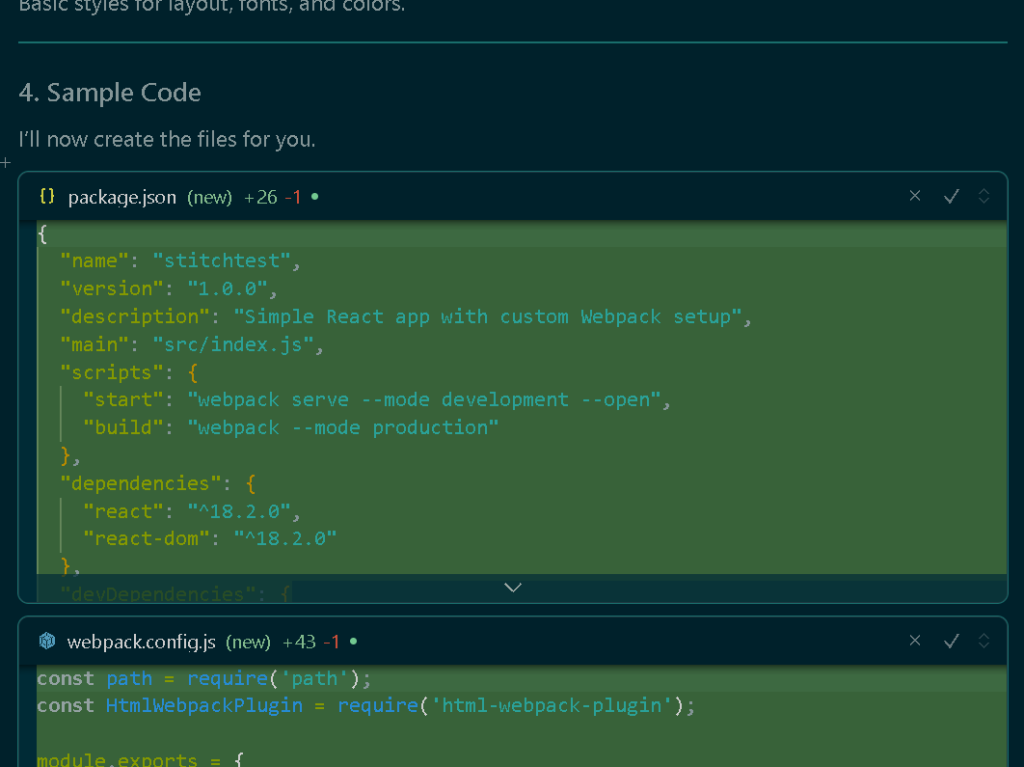

Cursor Agent in action

… Cursor now runs ahead automatically, editing the project, writing code, tweaking the design …

… and 1-2 minutes later gives us a complete project + instructions on how to load it in a web-browser:

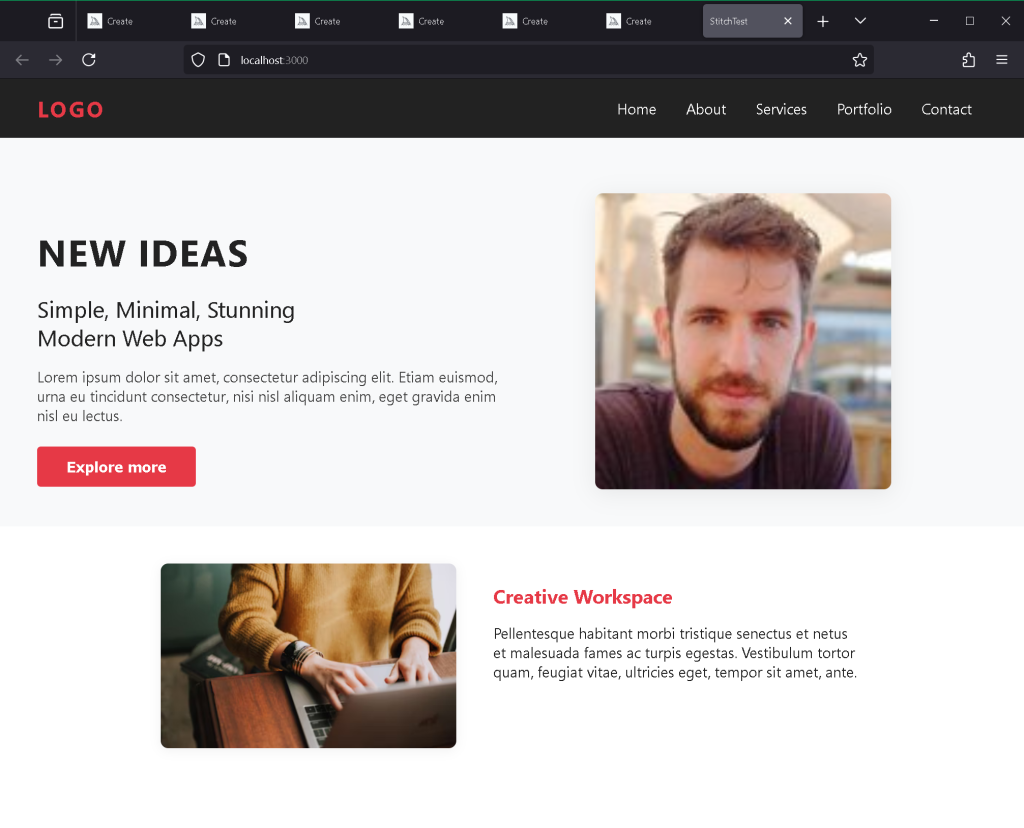

… opening in a web browser gives us:

Evaluation

It’s like Stitch, only faster and better quality; it’s already a full website (no need to ‘edit’ or ‘export’), and it’s significantly closer to the design brief. It’s not perfect – it’s an interpretation – so we’d still need to do some work if we wanted to stick to the exact details of the MJ design.

But it has no way to export to Figma.

Conclusion: Stitch is disappointing, isn’t exciting … yet

My example was a website, but the same is true for app design – with the bonus that if you’re using Cursor (or whatever AI-connected IDE you’ll build your app with) then you’re already editing the real app, instead of using Stitch as an intermediary stage.

Figma integration is a highly desired feature – but now I’m much more interested in what Figma themselves are doing in this space, what their own internal AI teams are up to. I think it will be better than this.

I had expected a lot more here from Google. This was their big announcement, and instead of jumping ahead of the competition they seem to have fallen further behind: off-the-shelf established options outperform Google’s best — and Google’s default offering (‘Standard’ mode) is just … really bad.

There’s a chance that this was released too soon and it’ll get better. But unfortunately Google’s history is the opposite: for 296 (!) previous examples see https://killedbygoogle.com/ which documents things Google launched then killed.

So let’s hope something big improves. But don’t hold your breath.

In the meantime, I recommend:

- Midjourney to generate designs + Cursor to convert them into full, first-class, working apps (or websites)

- Any of: Replit, Lovable, or Bolt: basically the same as Stitch, they use AI in a website to generate complete webapps